A unified representation is necessary to coordinate individual sensory models and to synchronize output for each sensory driver. This representation must account for all human participants in the VE, as well as all autonomous internal entities. Finally, gathered and computed information must be summarized and broadcast over the network in order to maintain a consistent distributed simulated environment.

To date, much of the design emphasis in VE systems has been dictated by the constraints imposed by generating the visual scene. The nonvisual modalities have been relegated to special-purpose peripheral devices. Similarly, this chapter is primarily concerned with the visual domain, and material on other modalities can be found in Chapters 3-7.

Each sensory modality requires a simulation tailored to its particular output. For example, a haptic display may require a physical simulation that includes compliance and texture. An acoustic display may require sound models based on impact, vibration, friction, fluid flow, etc. To generate the sensory output, a computer must simulate the VE for that particular sensory mode. The auditory display driver, for example, generates an appropriate waveform based on an acoustic simulation of the VE.
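The division of labor described here, with one shared representation feeding a separate simulation per sensory modality, can be illustrated with a small sketch. This is only one possible arrangement under stated assumptions, not the chapter's design: the class names (`Entity`, `WorldState`, `VisualModel`, `AcousticModel`, `HapticModel`), the `stiffness` and `loudness` fields, and the `step` function are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol, Tuple


@dataclass
class Entity:
    """One participant or autonomous object in the shared world model."""
    name: str
    position: Tuple[float, float, float]
    stiffness: float = 0.0  # hypothetical haptic property (compliance)
    loudness: float = 0.0   # hypothetical acoustic property


@dataclass
class WorldState:
    """Modality-independent representation shared by every sensory model."""
    time: float = 0.0
    entities: List[Entity] = field(default_factory=list)


class ModalityModel(Protocol):
    """Anything that can turn the shared state into one modality's frame."""
    def render(self, world: WorldState) -> dict: ...


class VisualModel:
    def render(self, world: WorldState) -> dict:
        # The visual simulation only needs names and positions.
        return {"t": world.time,
                "draw": [(e.name, e.position) for e in world.entities]}


class AcousticModel:
    def render(self, world: WorldState) -> dict:
        # Only entities that emit sound become acoustic sources.
        return {"t": world.time,
                "sources": [(e.name, e.loudness)
                            for e in world.entities if e.loudness > 0]}


class HapticModel:
    def render(self, world: WorldState) -> dict:
        # Only entities with a stiffness value can be touched.
        return {"t": world.time,
                "contacts": [(e.name, e.stiffness)
                             for e in world.entities if e.stiffness > 0]}


def step(world: WorldState, models: Dict[str, ModalityModel], dt: float) -> dict:
    """Advance the shared state once, then derive every modality's frame from it."""
    world.time += dt
    return {name: model.render(world) for name, model in models.items()}


world = WorldState(entities=[
    Entity("ball", (0.0, 1.0, 0.0), stiffness=50.0, loudness=0.3),
    Entity("wall", (2.0, 0.0, 0.0), stiffness=900.0),
])
models = {"visual": VisualModel(), "acoustic": AcousticModel(), "haptic": HapticModel()}
for name, frame in step(world, models, dt=0.01).items():
    print(name, frame)
```

Because every frame is derived from the same `WorldState` after a single time update, all modalities carry the same timestamp. That is the simplest form of the synchronization the text calls for; a real system would also have to handle differing update rates per modality and network distribution, which this sketch leaves out.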

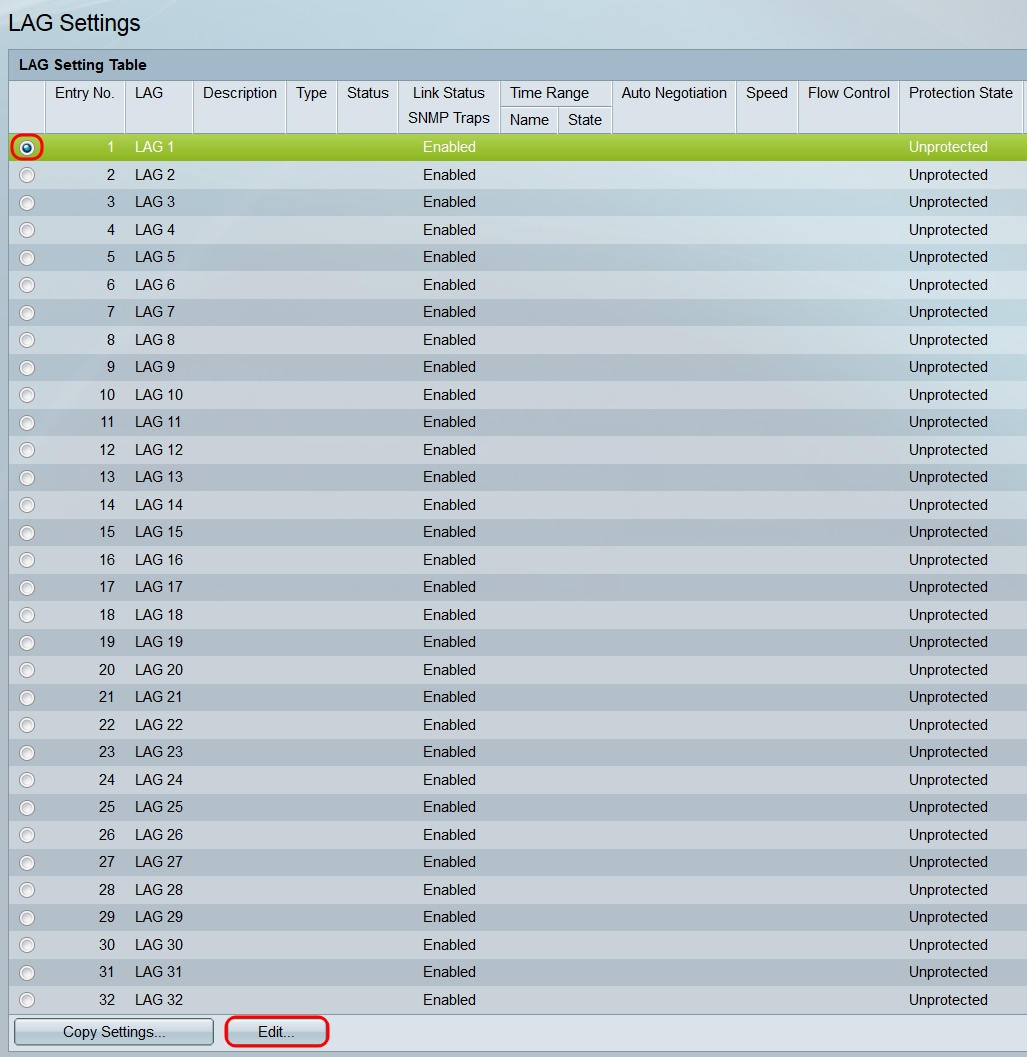

Beginning from left to right in Figure 8-1, human sensorimotor systems, such as eyes, ears, touch, and speech, are connected to the computer through human-machine interface devices. These devices generate output to, or receive input from, the human as a function of sensory modal drivers or renderers.

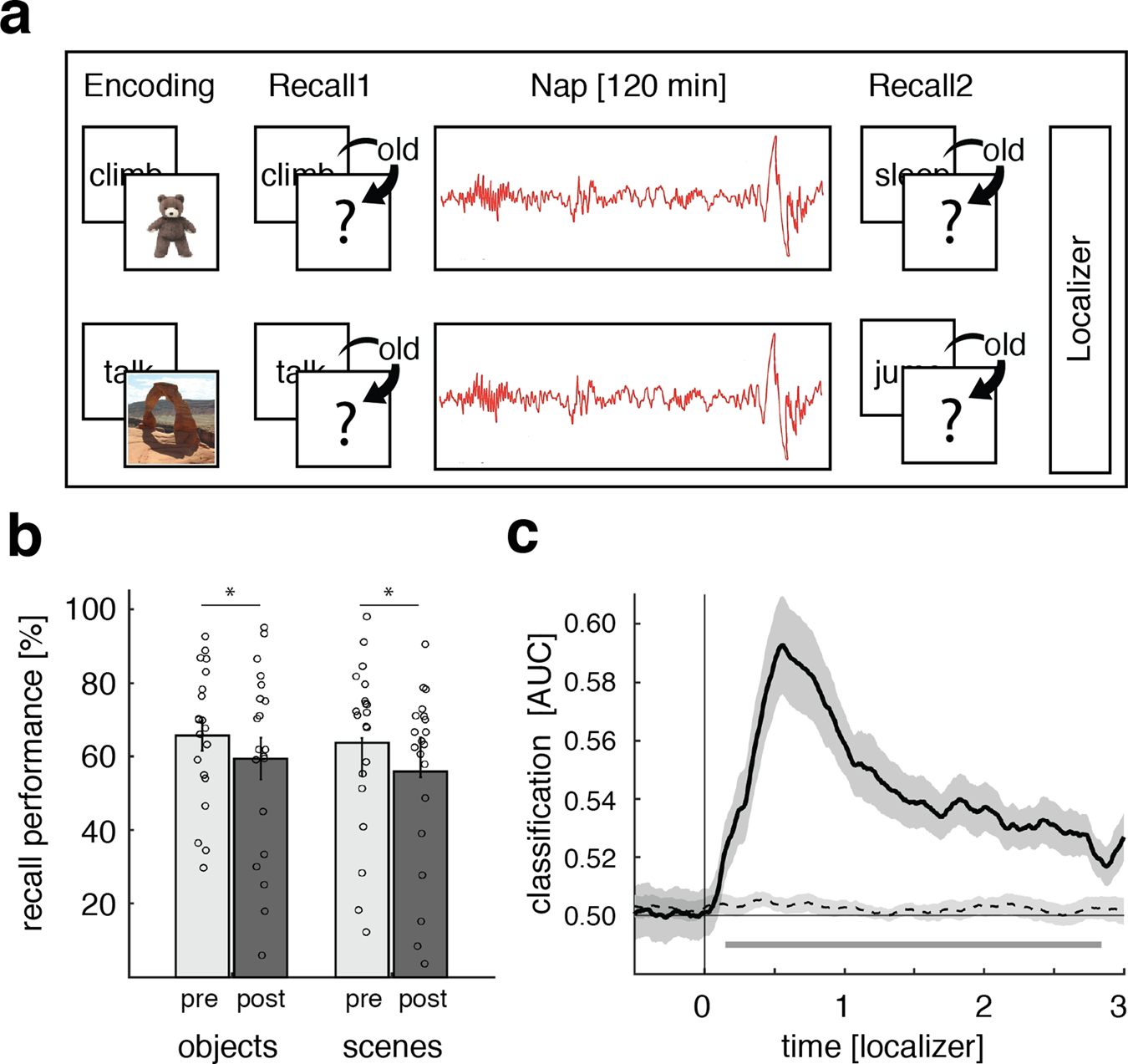

One possible organization of the computer technology for VEs is to decompose it into functional blocks. In Figure 8-1, three distinct classes of blocks are shown: (1) rendering hardware and software for driving modality-specific display devices; (2) hardware and software for modality-specific aspects of models and the generation of corresponding display representations; and (3) the core hardware and software in which modality-independent aspects of models, as well as consistency and registration among multimodal models, are taken into consideration.

Computer Hardware and Software for the Generation of Virtual Environments

The computer technology that allows us to develop three-dimensional virtual environments (VEs) consists of both hardware and software. The current popular, technical, and scientific interest in VEs is inspired, in large part, by the advent and availability of increasingly powerful and affordable visually oriented, interactive, graphical display systems and techniques. Graphical image generation and display capabilities that were not previously widely available are now found on the desktops of many professionals and are finding their way into the home. The greater affordability and availability of these systems, coupled with more capable, single-person-oriented viewing and control devices (e.g., head-mounted displays and hand-controllers) and an increased orientation toward real-time interaction, have made these systems both more capable of being individualized and more appealing to individuals.

Limiting VE technology to primarily visual interactions, however, simply defines the technology as a more personal and affordable variant of classical military and commercial graphical simulation technology. A much more interesting, and potentially useful, way to view VEs is as a significant subset of multimodal user interfaces. Multimodal user interfaces are simply human-machine interfaces that actively or purposefully use interaction and display techniques in multiple sensory modalities (e.g., visual, haptic, and auditory). In this sense, VEs can be viewed as multimodal user interfaces that are interactive and spatially oriented. The human-machine interface hardware that includes visual and auditory displays as well as tracking and haptic interface devices is covered in Chapters 3, 4, and 5. In this chapter, we focus on the computer technology for the generation of VEs.
